I am huge fan of the sport of Mixed Martial Arts (MMA). So as a man of data, you could expect me to visit the Ultimate Fighting Championship's (UFC) dedicated website for statistics, ufcstats.com, quite frequently. With that, I decided to create this simple data pipeline to set up an environment for my own data analysis.

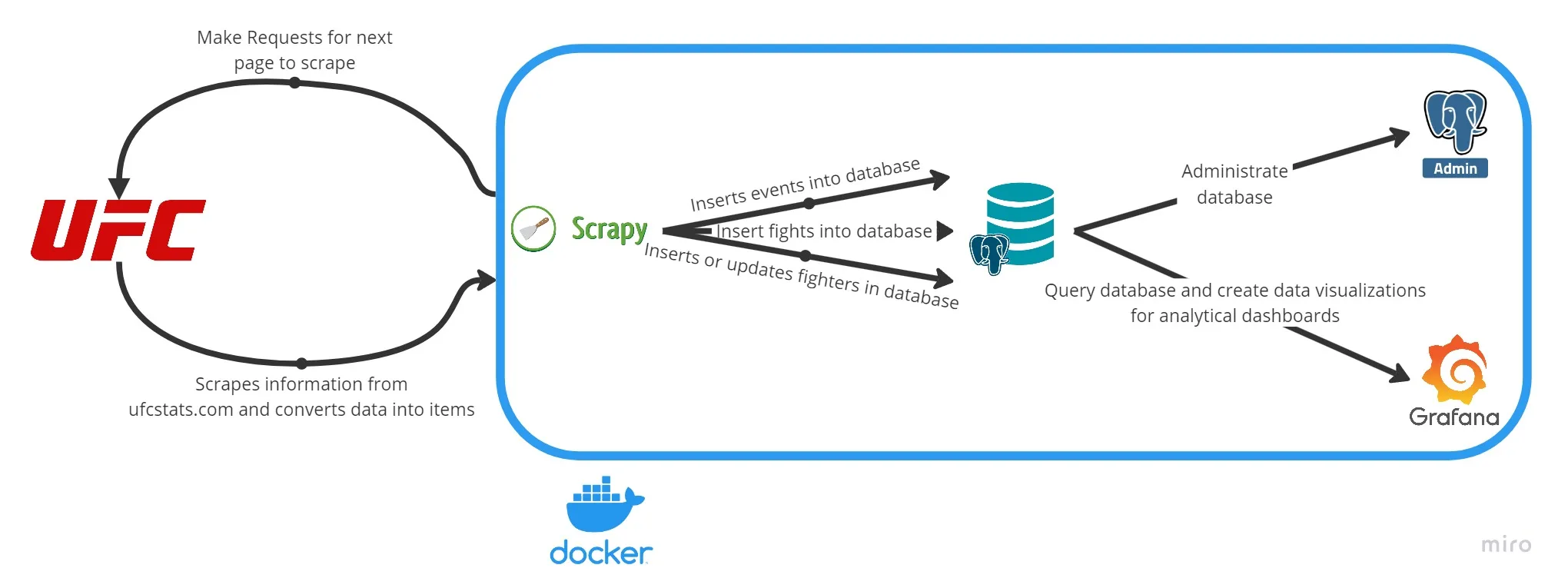

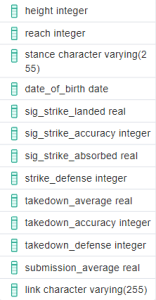

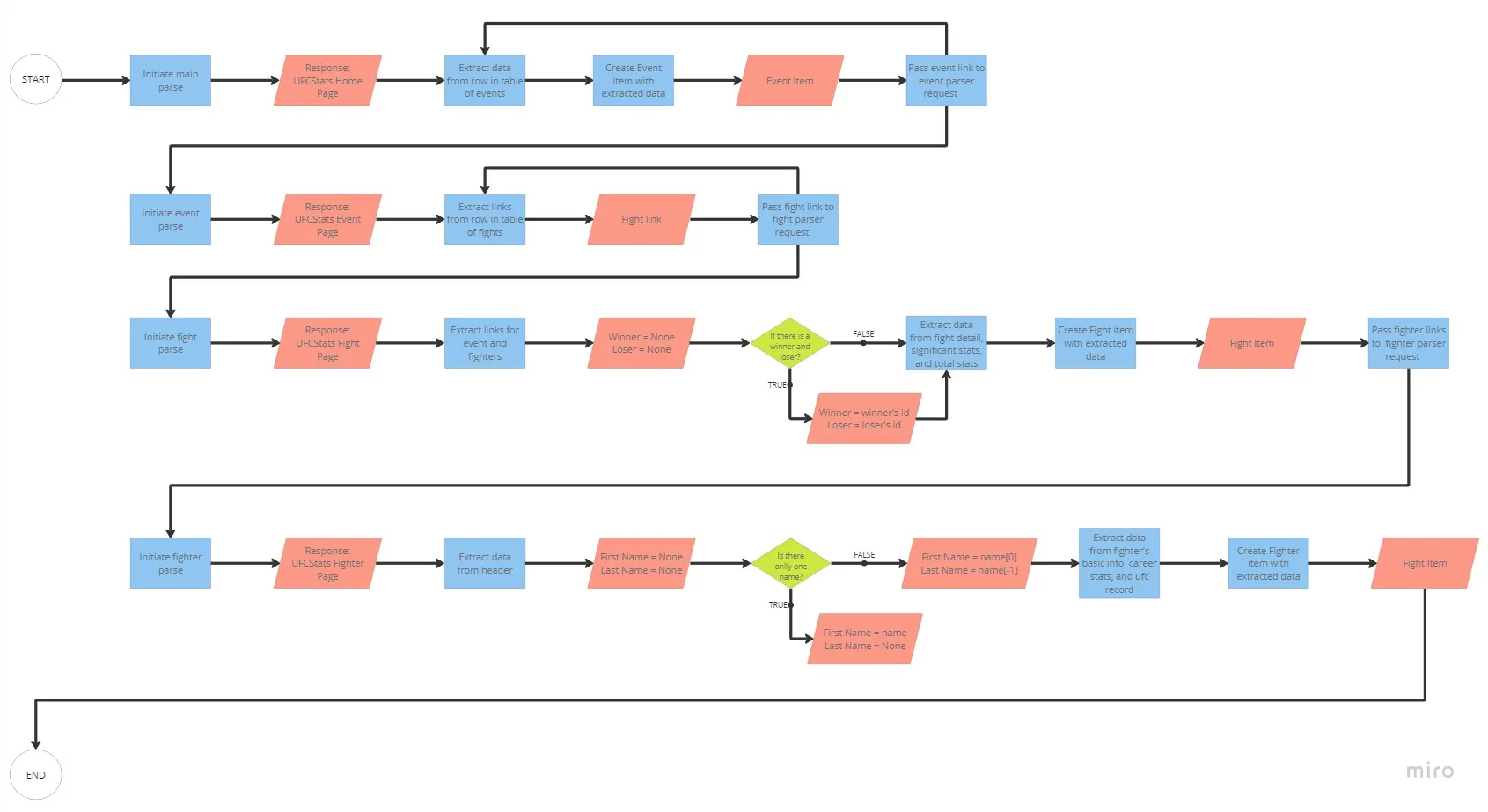

The flow of this pipeline is quite simple. We have a single data source and that is the ufcstats.com website. We use Scrapy to scrape the data from the website. We have three objects derivative to Scrapy’s Items class to create from this data: Events, Fights, and Fighters. As we are parsing pages and creating these objects, we collect links to pass later for further parsing. These items are then loaded into our PostgreSQL database upon being processed. We can then use our PGAdmin server to administer the database and our Grafana server to create visualizations of the data for analysis. This pipeline is activated every Sunday at 1:30am EST through Cron following the absolute latest time a UFC event should end.

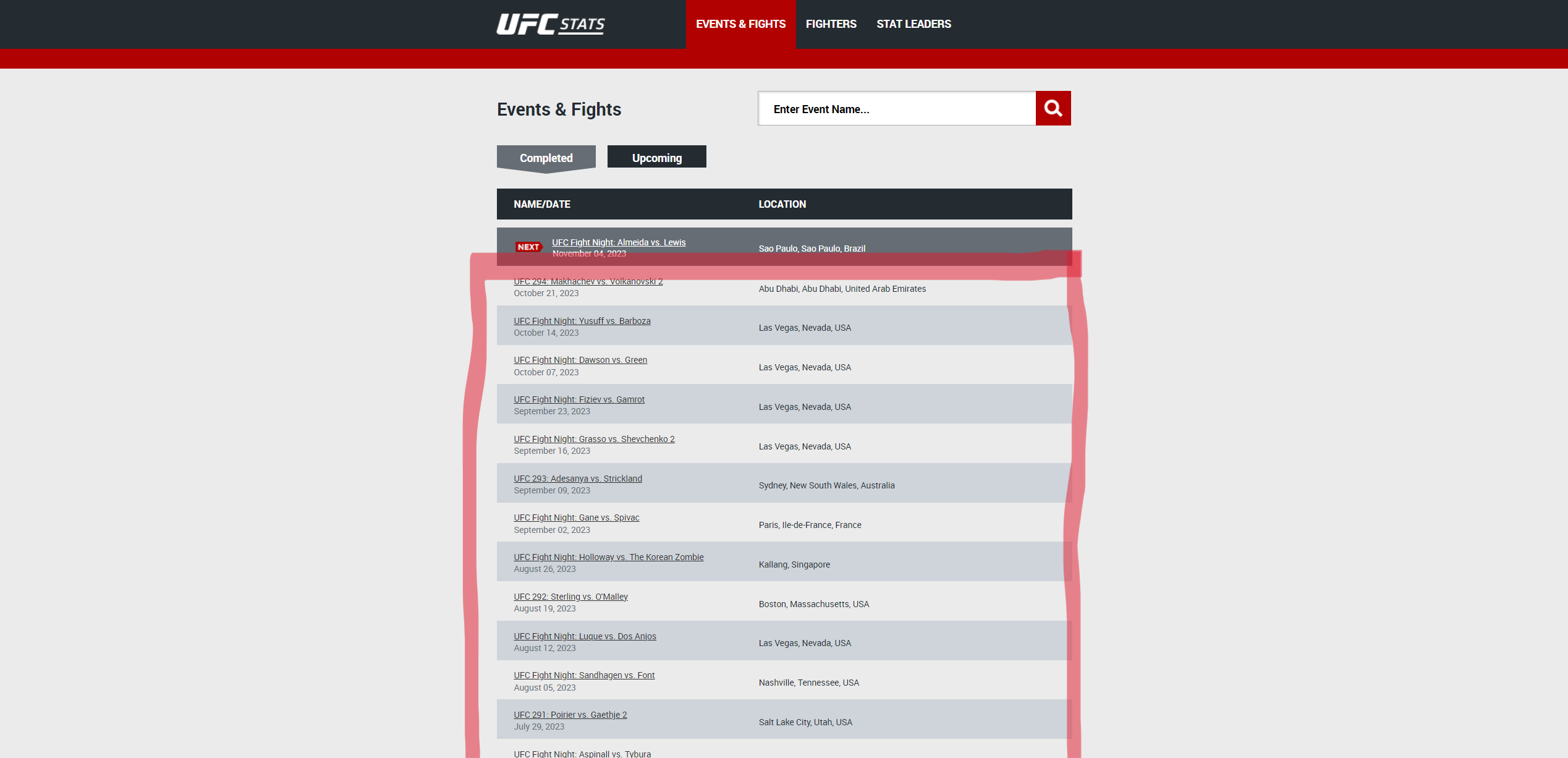

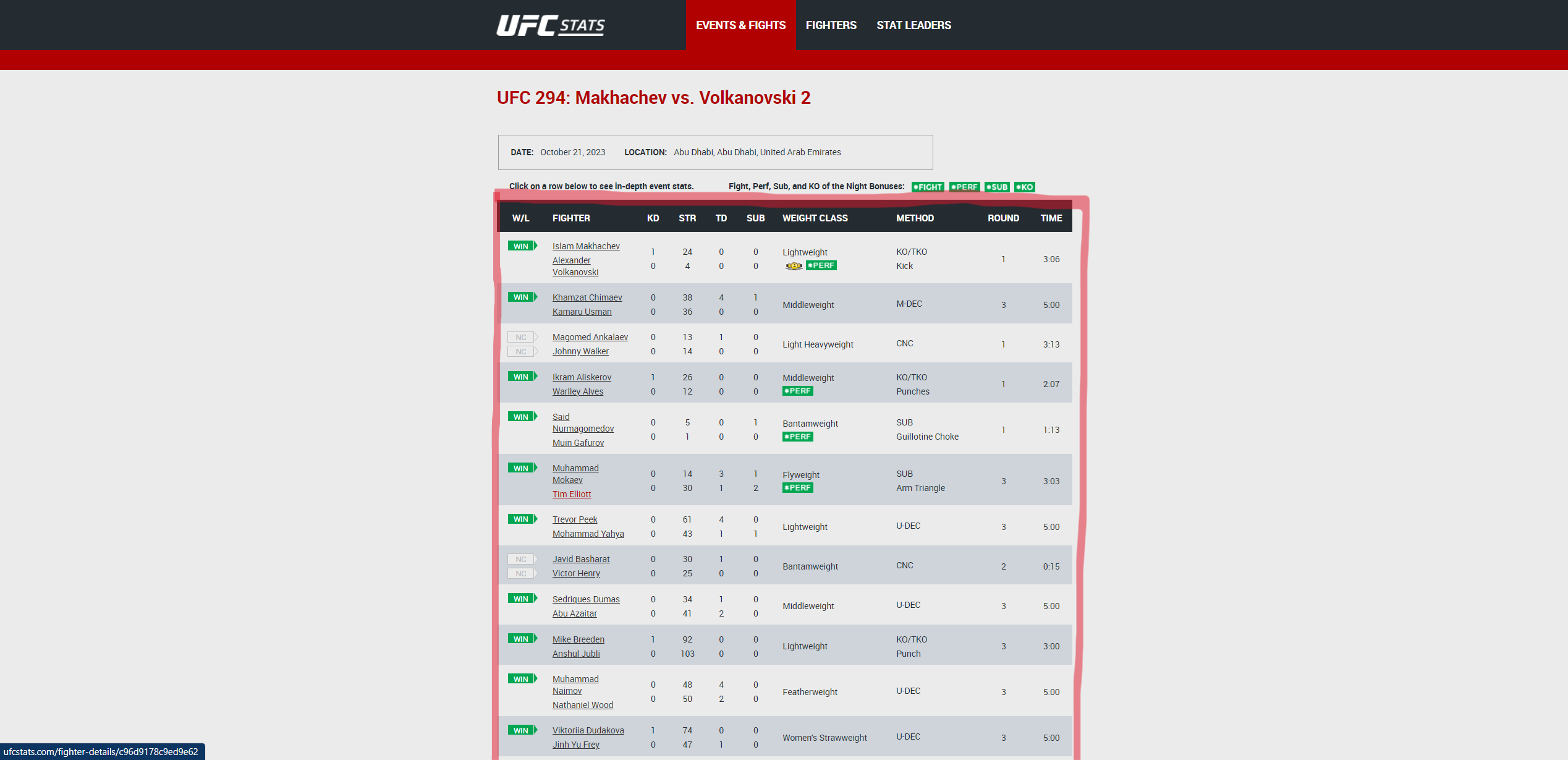

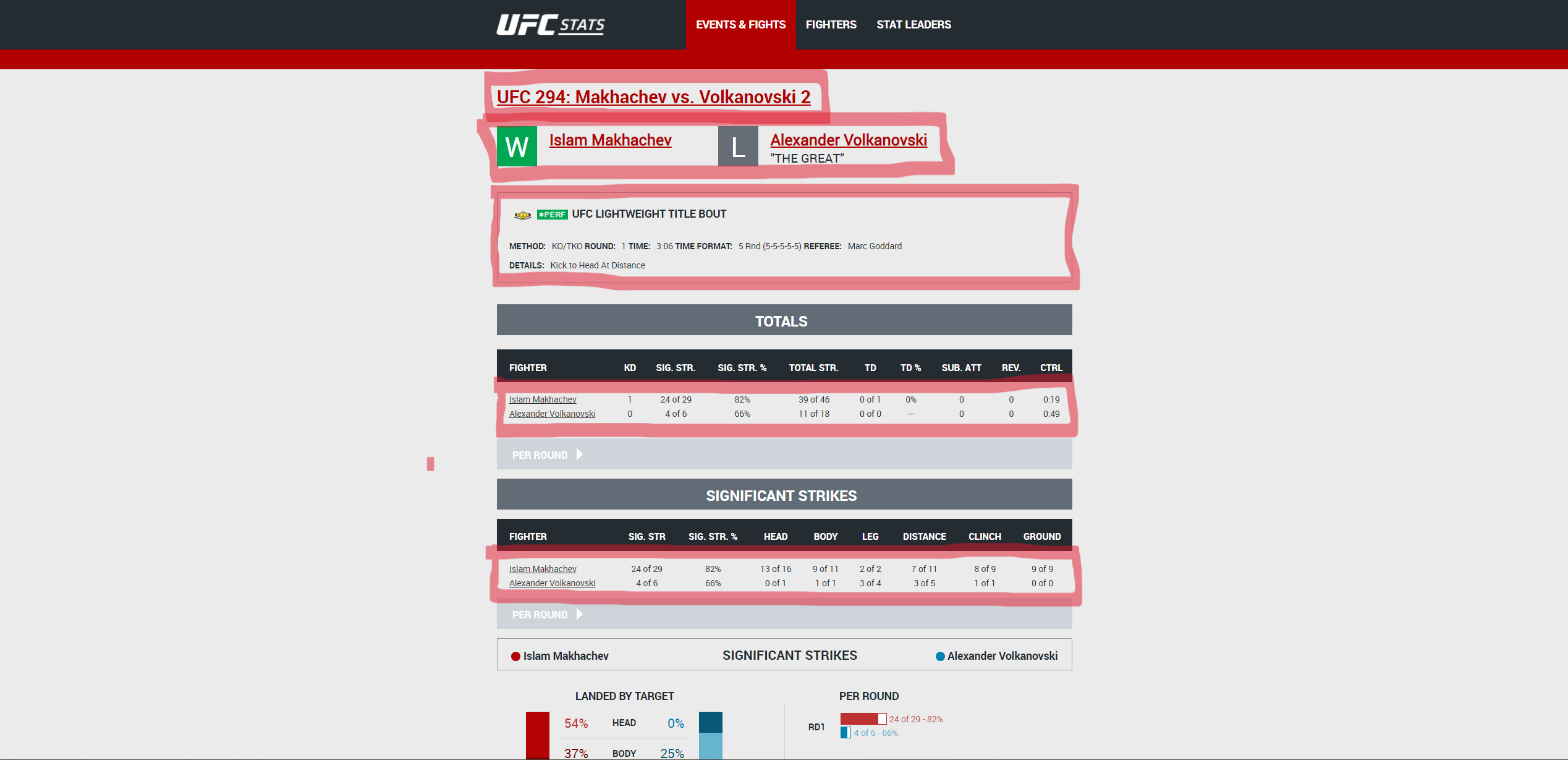

There are four different types of pages on this website we are concerned about:

The home page is the starting point for our project. This lists all of the events the UFC has hosted. The site gives us the option for all the results to be displayed in one page so pagination is not an issue.

This page includes information on an event and a list of all the fights that occurred with a summarization of the details.

This page contains basic information about a fight and in-depth statistics. This is the most data-rich page on the site.

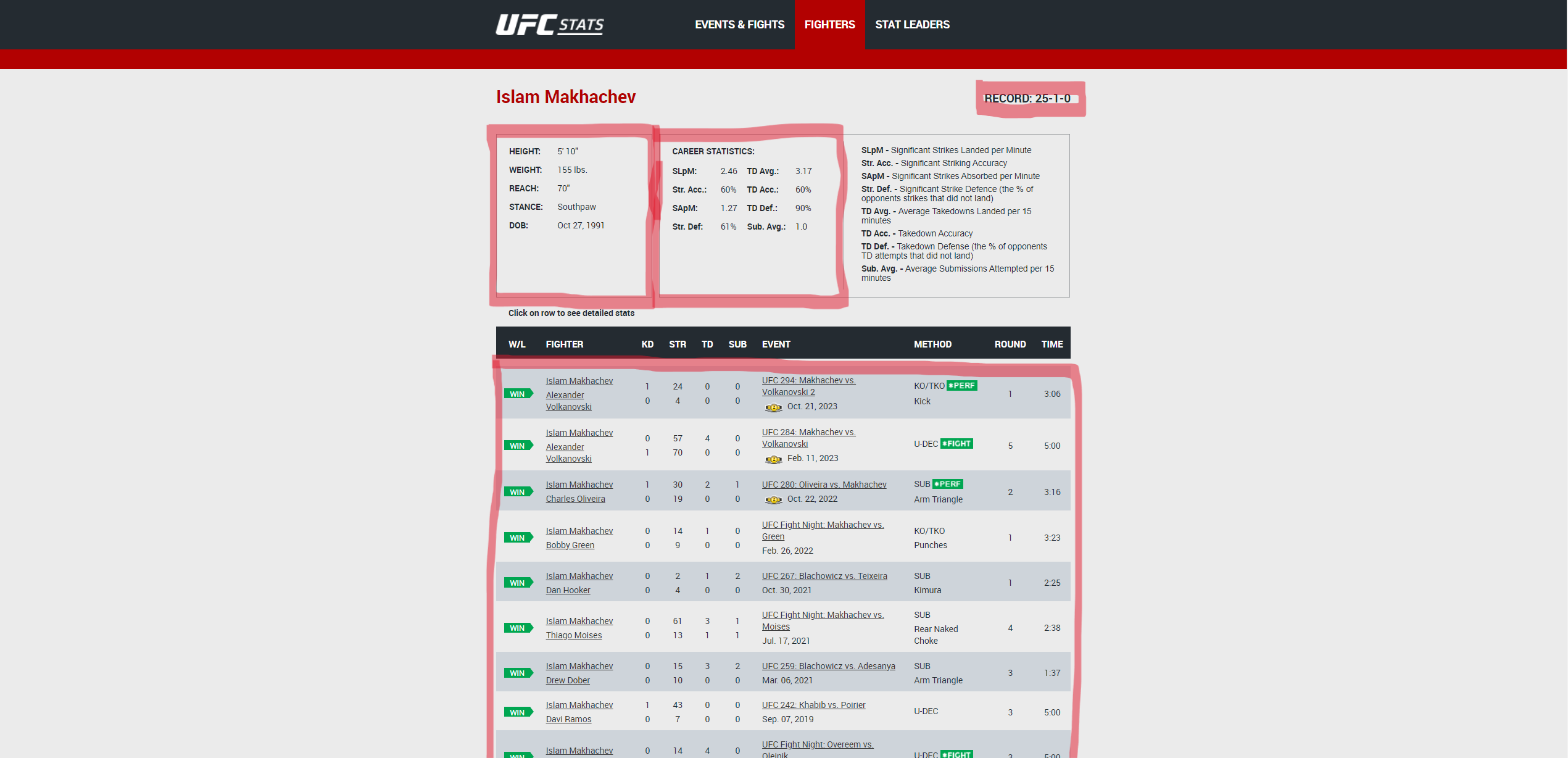

This is the final type of page we will be scraping. This contains basic information on a fighter, some career-long statistics, and a list of fights that the fighter participated in either promoted by the UFC or a promotion the UFC bought and owns.

1) Upon starting the program, we collect the necessary environment variables to establish our connection to the Postgres database.

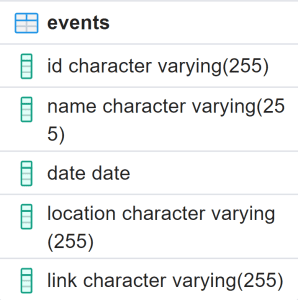

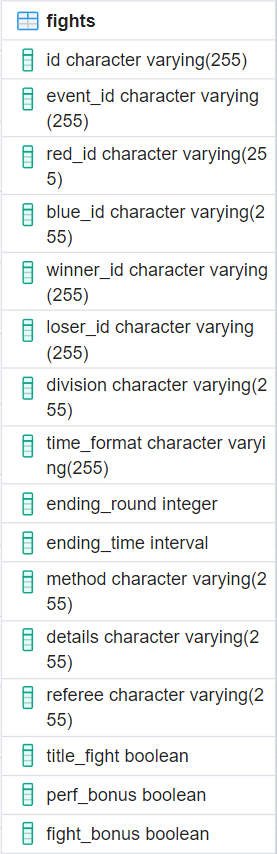

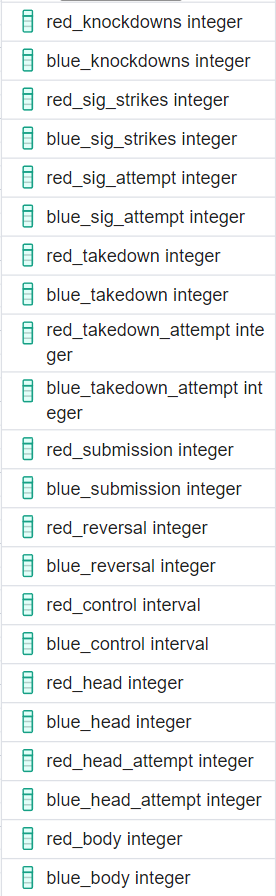

2) We execute SQL scripts to create the tables Events, Fights, and Fighters within the database, assuming they do not already exist.

# Database connection is established at the initialization of the pipeline

load_dotenv()

hostname = os.environ.get('POSTGRES_HOST', "Hostname not found")

username = os.environ.get('POSTGRES_USER', "Username not found")

password = os.environ.get('POSTGRES_PASSWORD', "Password not found")

database = os.environ.get('POSTGRES_DB', "Database name not found")

port = os.environ.get('POSTGRES_PORT', "Port not found")

logging.debug("Connecting to database...")

try:

self.connection = psycopg.connect(host=hostname, user=username, password=password, dbname=database, port=port)

self.cursor = self.connection.cursor()

logging.info("Connected to database.")

except:

logging.error("Could not connect to database.")

raise

# Create tables if they don't exist

self.cursor.execute(open('stat_scrape/sql/create_events_table.sql', 'r').read())

self.cursor.execute(open('stat_scrape/sql/create_fights_table.sql', 'r').read())

self.cursor.execute(open('stat_scrape/sql/create_fighters_table.sql', 'r').read())

3) We open the spider needed to crawl ufcstats.com. The method used for this is a pre-established aspect of the Spider class, but we overwrite it so that we can pass the date of the last event in the database to the spider.

def open_spider(self, spider):

# The last event date is retrieved from the database.

# If the database is empty, the last event date is set to 1/1/1 so the entire site is scraped.

spider.last_event_date = date(1, 1, 1)

logging.debug("Getting last event date from database...")

if len(self.cursor.execute("SELECT * from events;").fetchall()) != 0:

spider.last_event_date = self.cursor.execute("SELECT MAX(date) FROM events;").fetchone()[0]

logging.debug(f"Last event date: {spider.last_event_date}")

4) The spider will execute, extract all of the necessary data, and create the necessary objects we will load into our database. We will walkthrough that process in the next section.

5) When the spider creates a new item, it is passed back to pipeline.py and we execute another overwritten method.

6) We execute different SQL INSERT statements for loading the data into the database based on what kind of item has been passed.

7) In the case of a fighter, if the fighter already exists in the database, we execute a SQL UPDATE statement instead and update select fields of that fighter in the database.

process_item(self, item, spider):

if isinstance(item, Event):

logging.debug("Inserting event into database...")

try:

self.cursor.execute(open('stat_scrape/sql/insert_into_events.sql', 'r').read(),

(item.id,

...,

item.link))

logging.debug("Inserted event into database.")

except Exception as e:

logging.error(f"Could not insert event into database: {e}")

raise

# If the item is a fight, it is inserted into the fights table

elif isinstance(item, Fight):

logging.debug("Inserting fight into database...")

try:

self.cursor.execute(open('stat_scrape/sql/insert_into_fights.sql', 'r').read(),

(item.id,

...,

item.link))

logging.debug("Inserted fight into database.")

except Exception as e:

logging.error(f"Could not insert fight into database: {e}")

raise

# If the item is a fighter, it updates the fighter if it already exists in the database, otherwise it inserts it

elif isinstance(item, Fighter):

if len(self.cursor.execute(open('stat_scrape/sql/select_fighters.sql', 'r').read(), (item.id,)).fetchall()) != 0:

logging.debug("Fighter already in database. Updating...")

try:

self.cursor.execute(open('stat_scrape/sql/update_fighters.sql', 'r').read(),

(item.first_name,

...,

item.id))

logging.debug("Updated fighter in database.")

except Exception as e:

logging.error(f"Could not update fighter in database: {e}")

raise

else:

logging.debug("Inserting fighter into database...")

try:

self.cursor.execute(open('stat_scrape/sql/insert_into_fighters.sql', 'r').read(),

(item.id,

...,

item.link))

logging.debug("Inserted fighter into database.")

except Exception as e:

logging.error(f"Could not insert fighter into database: {e}")

raise

self.connection.commit()

return item

With that, we close the spider, our database connection, and the scraping program ends.

def close_spider(self, spider):

self.cursor.close()

self.connection.close()

1) We initiate the main parse method, targeting the homepage containing a table of all UFC events. We begin a loop that iterates through all of the events in the table extracting the data.

2) We create the Event item with the extracted data.

3) We check to see if that Event has the same or earlier date than the latest event in our database. If yes, than the loop is broken and the main parse finishes.

4) We send a request to the next parse method with links to the individual event pages.

def parse(self, response):

source_date_format = "%B %d, %Y"

logging.debug(f"Parsing events starting from {self.last_event_date}")

for row in response.xpath(

'//*[@class="b-statistics__table-events"]//tbody//tr'

)[2:]:

content = row.xpath("td[1]//text() | td[1]//@href").getall()

event = Event(

id=content[2].split("/")[-1],

name=" ".join(content[3].split()),

date=datetime.strptime(" ".join(content[5].split()), source_date_format),

location=" ".join(row.xpath("td[2]//text()").getall()[0].split()),

link=content[2],

)

if event.date.date() <= self.last_event_date:

logging.debug("Reached last event.")

break

yield event

yield Request(event.link, callback=self.parse_event, dont_filter=False)

5) We now process the individual event pages. With this parse method, we only extract the links for the individual fights that occurred at the event.

6) We send a request to the next parse method with links to the individual fight pages.

# The second parse is for the individual event pages.

# Collects the links for the individual fight pages for the next parse.

def parse_event(self, response):

for row in response.xpath(

'//*[@class="b-fight-details__table b-fight-details__table_style_margin-top b-fight-details__table_type_event-details js-fight-table"]//tbody//tr'

):

fight_link = row.xpath("td//a/@href").getall()[0]

yield Request(fight_link, callback=self.parse_fight, dont_filter=False)

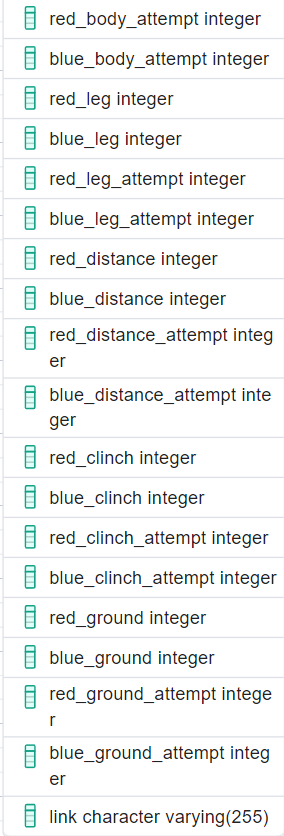

7) With the fight parse method, we separately extract different sections of the individual fight pages. These require cleaning and formatting before item creation.

8) We create the fight item with the extracted data.

10) We send a request to the next parse method with links to the individual fighter pages.

def parse_fight(self, response):

event_link = response.xpath('//*[@class="b-content__title"]//a/@href').get()

fighter_links = response.xpath(

'//*[@class="b-fight-details__person"]//a/@href'

).getall()

# The fight has three images indicating if it was a title fight, performance of the night, and/or fight of the night.

special_marks = response.xpath(

'//*[@class="b-fight-details__fight-head"]//img/@src'

).getall()

# The winner and loser are indicated by a "W" or "L" in the fight details.

# In the case of a draw or a no contest, the winner and loser are both None.

winner = None

loser = None

for fighter in response.xpath(

'//*[@class="b-fight-details__persons clearfix"]/div'

):

if re.search("W", "".join(fighter.xpath("i//text()").get().split())):

winner = (

fighter.xpath(

'*[@class="b-fight-details__person-text"]/h3/a/@href'

)

.get()

.split('/')[-1]

)

elif re.search("L", "".join(fighter.xpath("i//text()").get().split())):

loser = (

fighter.xpath(

'*[@class="b-fight-details__person-text"]/h3/a/@href'

)

.get()

.split('/')[-1]

)

fight_division = ' '.join(response.xpath(

'normalize-space(//*[@class="b-fight-details__fight-head"])'

).get().split()[:-1])

fight_details = clean_text(

response, '//*[@class="b-fight-details__content"]//text()'

)

fight_stats = response.xpath('//*[@class="b-fight-details__table-body"]/tr')

total_stats = clean_text(fight_stats[0], ("td//text()"))

significant_stats = clean_text(

fight_stats[int(len(fight_stats) / 2)], ("td//text()")

)

# The fight stats are seperated into two tables, one for total strikes and one for significant strikes.

# They are also seperated into two tables for each fighter, one for the red corner and one for blue corner.

fight = Fight(

id=response.url.split('/')[-1],

event_id=event_link.split('/')[-1],

red_id=fighter_links[0].split('/')[-1],

blue_id=fighter_links[1].split('/')[-1],

winner=winner,

loser=loser,

division=fight_division,

time_format=fight_details[7],

ending_round=int(fight_details[3]),

ending_time=time_clean(fight_details[5]),

method=fight_details[1],

details=" ".join(fight_details[11:]),

referee=fight_details[9],

title_fight="http://1e49bc5171d173577ecd-1323f4090557a33db01577564f60846c.r80.cf1.rackcdn.com/belt.png" in special_marks,

perf_bonus="http://1e49bc5171d173577ecd-1323f4090557a33db01577564f60846c.r80.cf1.rackcdn.com/perf.png" in special_marks,

fotn_bonus="http://1e49bc5171d173577ecd-1323f4090557a33db01577564f60846c.r80.cf1.rackcdn.com/fight.png" in special_marks,

red_kd=int(total_stats[2]),

blue_kd=int(total_stats[3]),

red_sig_strike=int(total_stats[4].split(" of ")[0]),

blue_sig_strike=int(total_stats[5].split(" of ")[0]),

red_sig_attempt=int(total_stats[4].split(" of ")[1]),

blue_sig_attempt=int(total_stats[5].split(" of ")[1]),

red_takedown=int(total_stats[10].split(" of ")[0]),

blue_takedown=int(total_stats[11].split(" of ")[0]),

red_takedown_attempt=int(total_stats[10].split(" of ")[1]),

blue_takedown_attempt=int(total_stats[11].split(" of ")[1]),

red_sub=int(total_stats[14]),

blue_sub=int(total_stats[15]),

red_reversal=int(total_stats[16]),

blue_reversal=int(total_stats[17]),

red_control=time_clean(total_stats[18]),

blue_control=time_clean(total_stats[19]),

red_head=int(significant_stats[6].split(" of ")[0]),

blue_head=int(significant_stats[7].split(" of ")[0]),

red_head_attempt=int(significant_stats[6].split(" of ")[1]),

blue_head_attempt=int(significant_stats[7].split(" of ")[1]),

red_body=int(significant_stats[8].split(" of ")[0]),

blue_body=int(significant_stats[9].split(" of ")[0]),

red_body_attempt=int(significant_stats[8].split(" of ")[1]),

blue_body_attempt=int(significant_stats[9].split(" of ")[1]),

red_leg=int(significant_stats[10].split(" of ")[0]),

blue_leg=int(significant_stats[11].split(" of ")[0]),

red_leg_attempt=int(significant_stats[10].split(" of ")[1]),

blue_leg_attempt=int(significant_stats[11].split(" of ")[1]),

red_dist=int(significant_stats[12].split(" of ")[0]),

blue_dist=int(significant_stats[13].split(" of ")[0]),

red_dist_attempt=int(significant_stats[12].split(" of ")[1]),

blue_dist_attempt=int(significant_stats[13].split(" of ")[1]),

red_clinch=int(significant_stats[14].split(" of ")[0]),

blue_clinch=int(significant_stats[15].split(" of ")[0]),

red_clinch_attempt=int(significant_stats[14].split(" of ")[1]),

blue_clinch_attempt=int(significant_stats[15].split(" of ")[1]),

red_ground=int(significant_stats[16].split(" of ")[0]),

blue_ground=int(significant_stats[17].split(" of ")[0]),

red_ground_attempt=int(significant_stats[16].split(" of ")[1]),

blue_ground_attempt=int(significant_stats[17].split(" of ")[1]),

link=response.url,

)

yield fight

for fighter in fighter_links:

yield Request(fighter, callback=self.parse_fighter, dont_filter=False)

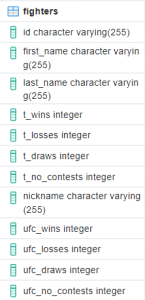

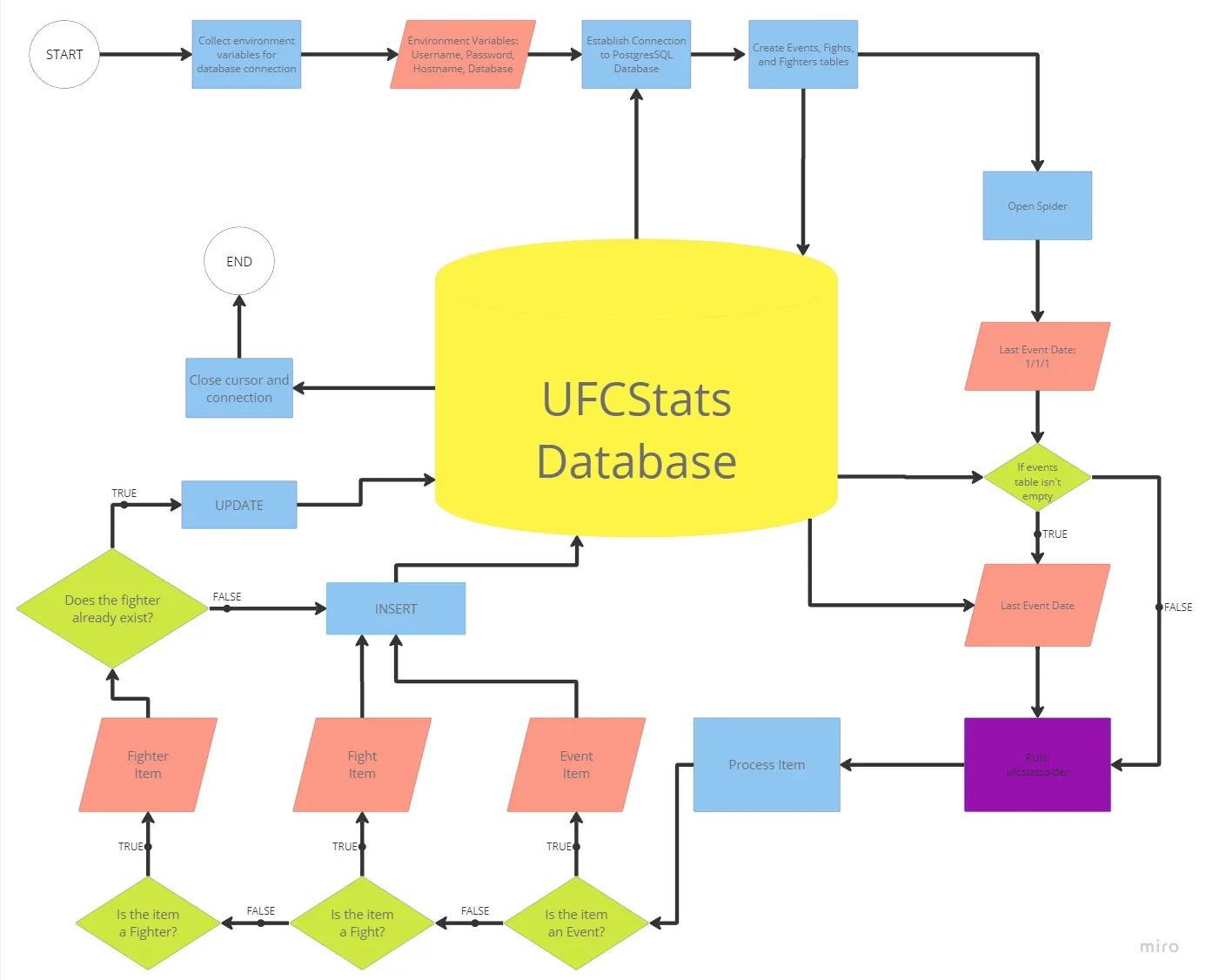

11) The final layer of the scraping process is the parsing of individual fighter pages.

12) We extract and clean several sections of this page separately.

13) Some basic info like height, reach, stance, and date of birth are sometimes not available and affect how we can parse the data. Special conditionals are implemented to deal with this.

14) We create the fighter object.

def parse_fighter(self, response):

date_format = "%b %d, %Y"

header = clean_text(response, '//*[@class="b-content__title"]//text()')

# Name is extracted this way for either a single name or a name with more than the first and last name.

first_name = None

last_name = None

if len(header[0].split()) < 2:

first_name = header[0]

else:

first_name, last_name = header[0].split()[0], header[0].split()[-1]

record = re.findall(r"\d+", header[1])

t_wins = int(record[0])

t_losses = int(record[1])

t_draws = int(record[2])

t_no_contests = 0

if len(record) > 3:

t_no_contests = int(record[3])

nickname = " ".join(

response.xpath('//*[@class="b-content__Nickname"]//text()').get().split()

)

basic_info = clean_text(

response,

'//*[@class="b-list__info-box b-list__info-box_style_small-width js-guide"]//text()'

)

# The height, reach, stance, and date of birth are not always present.

height = None

reach = None

stance = None

date_of_birth = None

if basic_info[1] != "--":

height = convert_height(basic_info[1])

if basic_info[5] != "--":

reach = int(basic_info[5].replace('"', ""))

if basic_info[7] in ["Orthodox", "Southpaw", "Switch"]:

stance = basic_info[7]

if basic_info[-1] != "--":

date_of_birth = datetime.strptime(

" ".join(basic_info[-1].split()), date_format

)

career_stats = clean_text(

response,

'//*[@class="b-list__info-box b-list__info-box_style_middle-width js-guide clearfix"]//text()',

)

# This extracts all of the results from the fighter's fights under the UFC banner or past promotions that were bought by the UFC.

ufc_results = clean_text(response, '//*[@class="b-flag__text"]//text()')

fighter = Fighter(

id=response.url.split('/')[-1],

first_name=first_name,

last_name=last_name,

t_wins=t_wins,

t_losses=t_losses,

t_draws=t_draws,

t_no_contests=t_no_contests,

nickname=nickname,

ufc_wins=ufc_results.count("win"),

ufc_losses=ufc_results.count("loss"),

ufc_draws=ufc_results.count("draw"),

ufc_no_contests=ufc_results.count("nc"),

height=height,

reach=reach,

stance=stance,

date_of_birth=date_of_birth,

sig_strike_landed=float(career_stats[2]),

sig_strike_acc=int(career_stats[4].replace("%", "")),

sig_strike_abs=float(career_stats[6]),

strike_def=int(career_stats[8].replace("%", "")),

takedown_avg=float(career_stats[10]),

takedown_acc=int(career_stats[12].replace("%", "")),

takedown_def=int(career_stats[14].replace("%", "")),

sub_avg=float(career_stats[16]),

link=response.url,

)

yield fighter

15) parse_events(), parse_fights(), and parse_fighters() run recursively until all requests are completed.

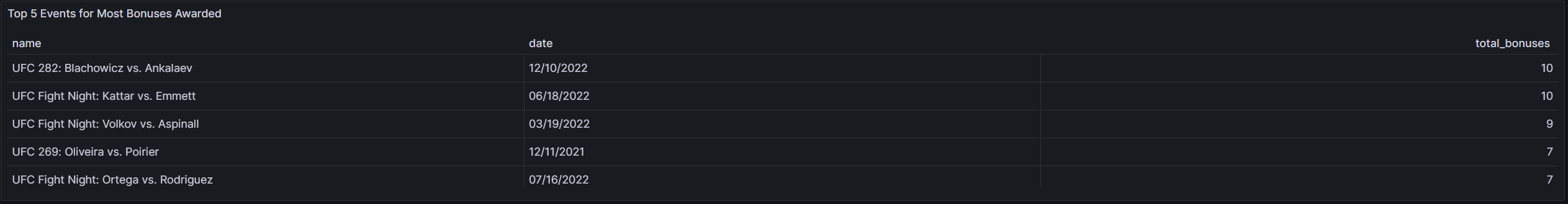

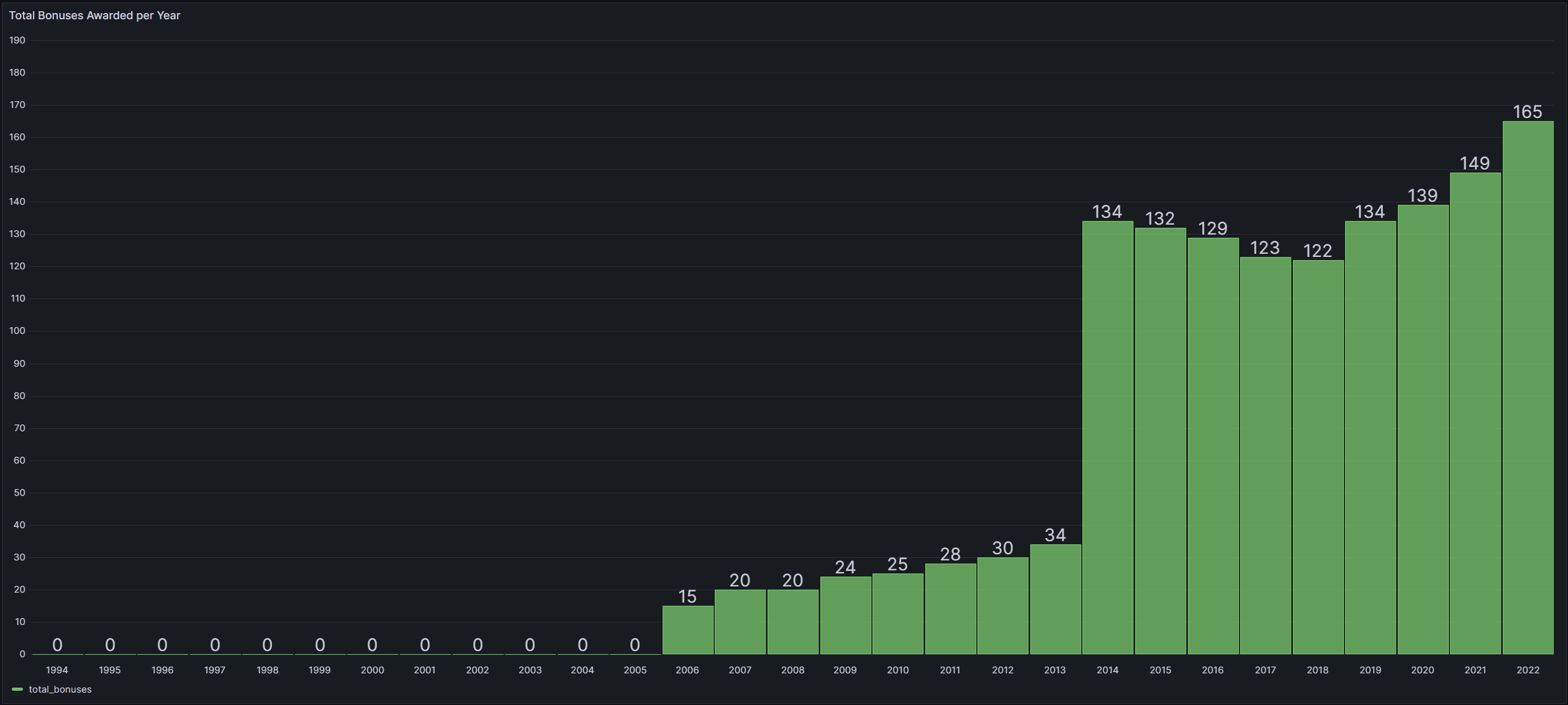

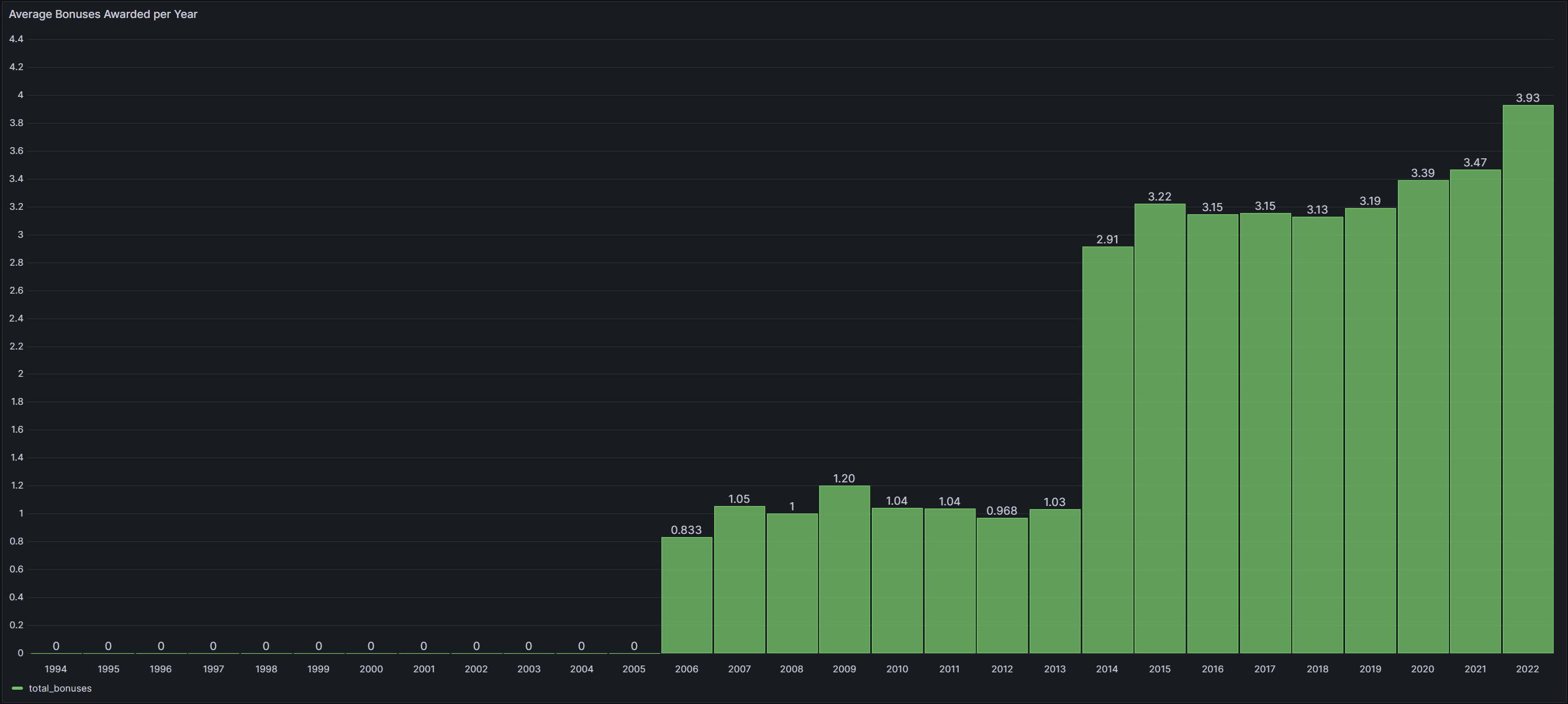

These are some of the analytical visualizations I made in Grafana and the SQL queries written to produce them.

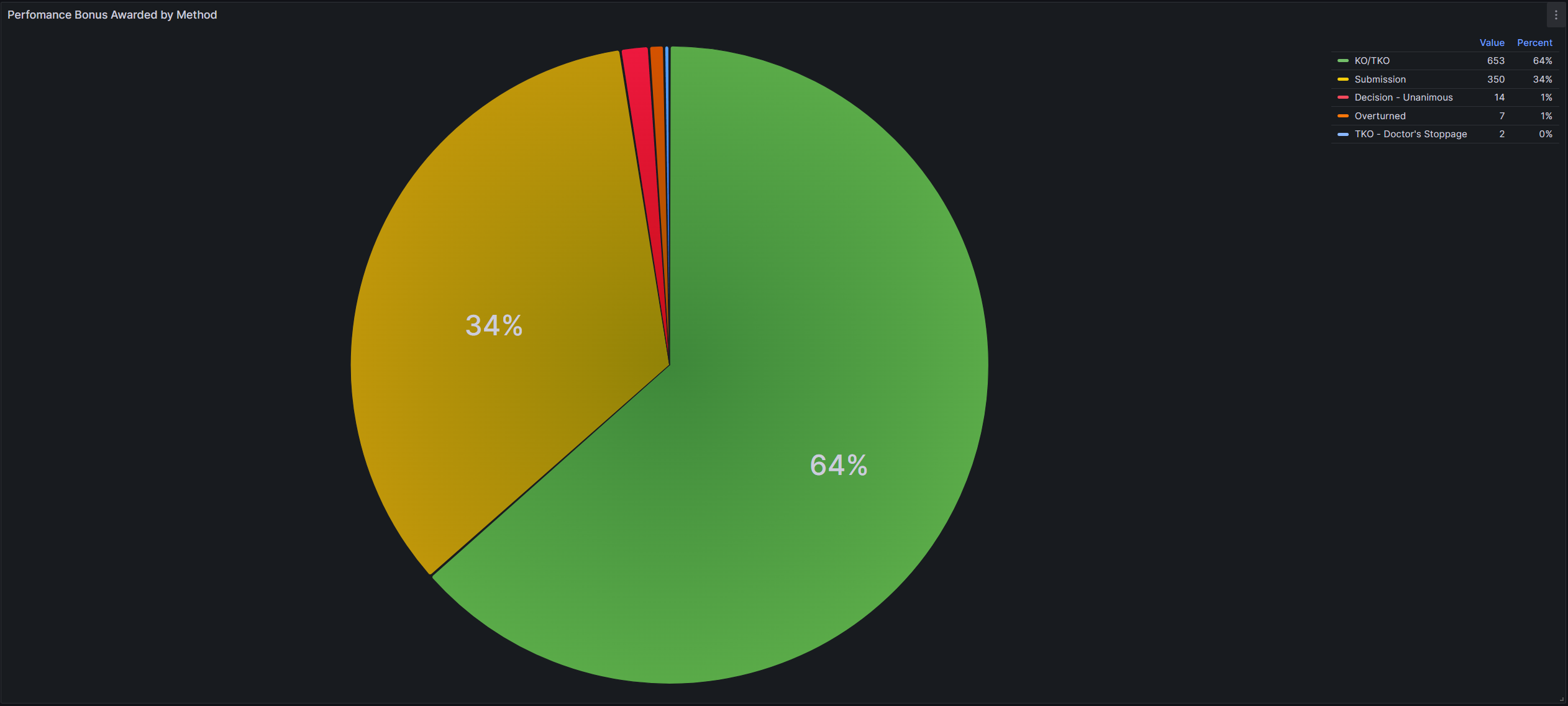

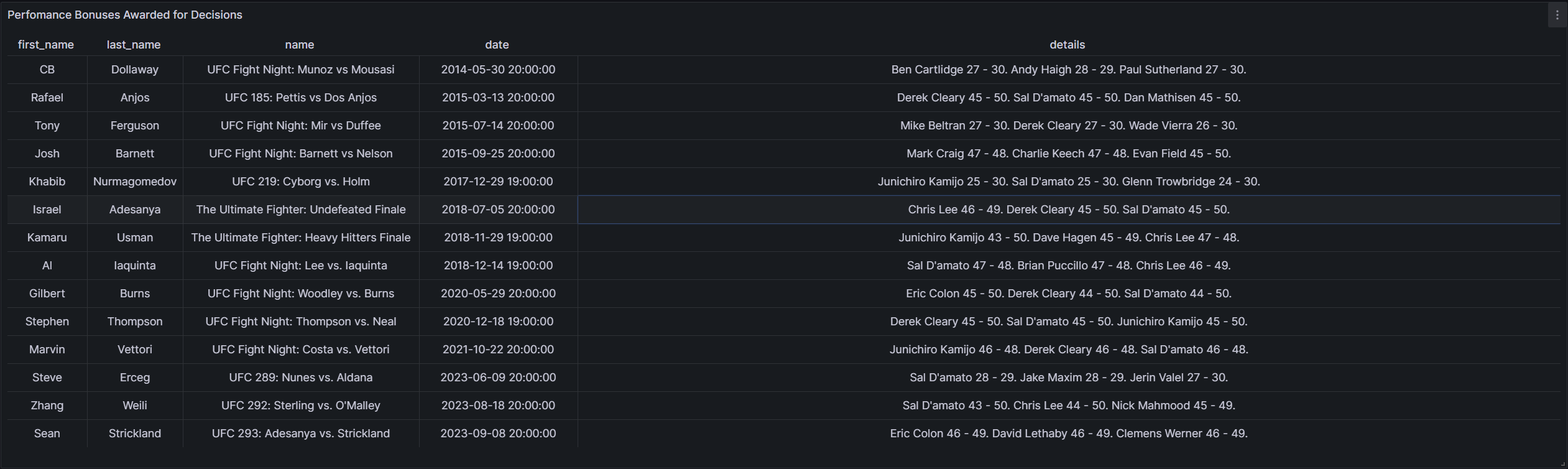

This is a simple analysis on how the UFC has awarded “Perfomance of the Night” or “Fight of the Night” bonuses over time.

SELECT e.name, TO_CHAR(e.date,'mm/dd/yyyy') date, (COUNT(CASE WHEN f.perf_bonus THEN 1 END) + COUNT(CASE WHEN f.fight_bonus THEN 1 END)) as total_bonuses

FROM events as e

JOIN fights as f

ON e.id = f.event_id

GROUP BY e.name, e.date

ORDER BY total_bonuses desc

LIMIT 5

WITH bonus_per_year AS

(SELECT e.name, DATE_PART('year', e.date) as year, (COUNT(CASE WHEN f.perf_bonus THEN 1 END) + COUNT(CASE WHEN f.fight_bonus THEN 1 END)) as total_bonuses

FROM events as e

JOIN fights as f

ON e.id = f.event_id

GROUP BY e.name, e.date

ORDER BY total_bonuses desc

)

SELECT SUM(total_bonuses) as total_bonuses, year

FROM bonus_per_year

GROUP BY year

HAVING YEAR != date_part('year', CURRENT_DATE)

ORDER BY year ASC

WITH bonus_per_year AS

(SELECT e.name, DATE_PART('year', e.date) as year, (COUNT(CASE WHEN f.perf_bonus THEN 1 END) + COUNT(CASE WHEN f.fight_bonus THEN 1 END)) as total_bonuses

FROM events as e

JOIN fights as f

ON e.id = f.event_id

GROUP BY e.name, e.date

ORDER BY total_bonuses desc

)

SELECT AVG(total_bonuses) as total_bonuses, year

FROM bonus_per_year

GROUP BY year

HAVING YEAR != date_part('year', CURRENT_DATE)

ORDER BY year ASC

SELECT method, COUNT(*)

FROM fights

WHERE perf_bonus = True

GROUP BY method

SELECT fr.first_name, fr.last_name, e.name, e.date, f.details

FROM fights as f

JOIN events as e

ON e.id = f.event_id

JOIN fighters as fr

ON fr.id = f.winner_id

WHERE f.perf_bonus = True

AND f.method LIKE '%Decision%'

ORDER BY e.date

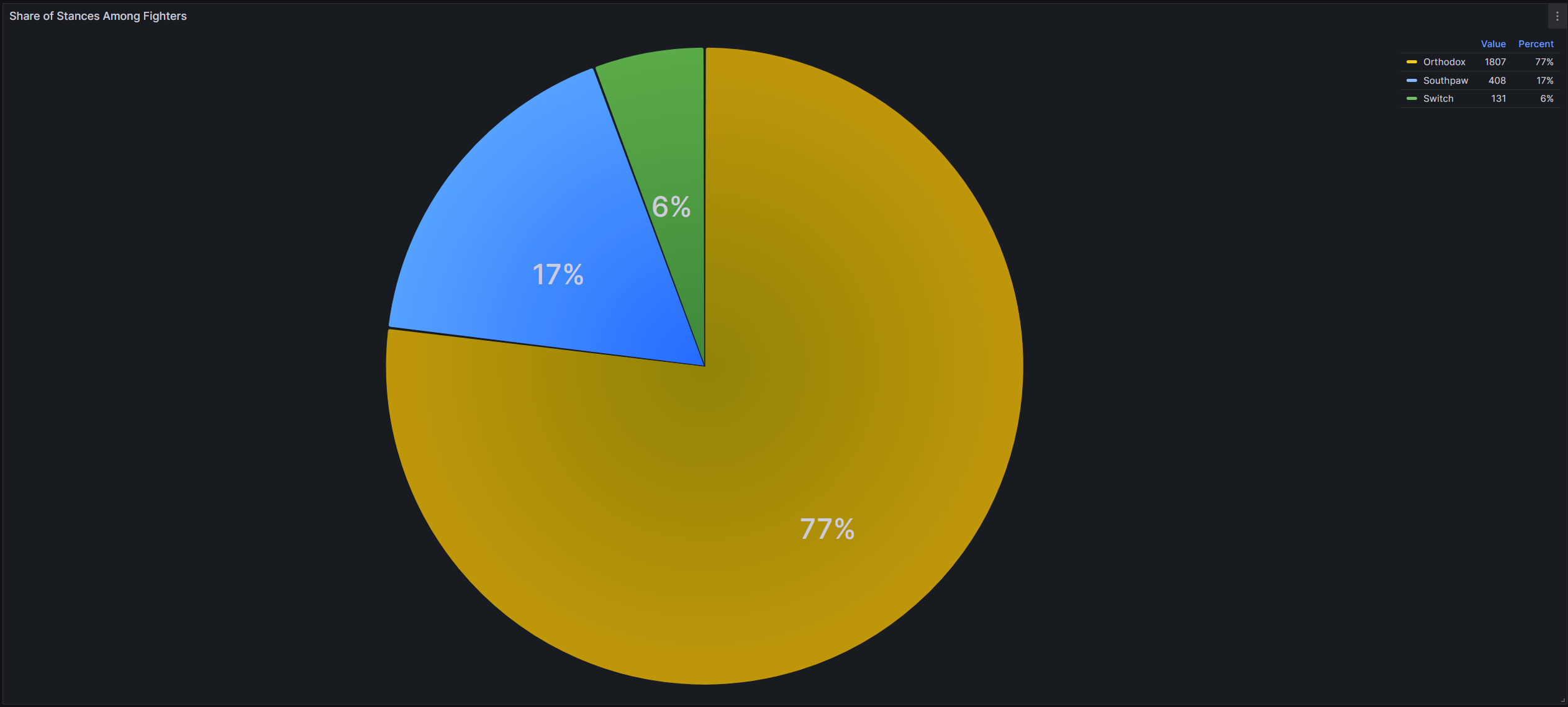

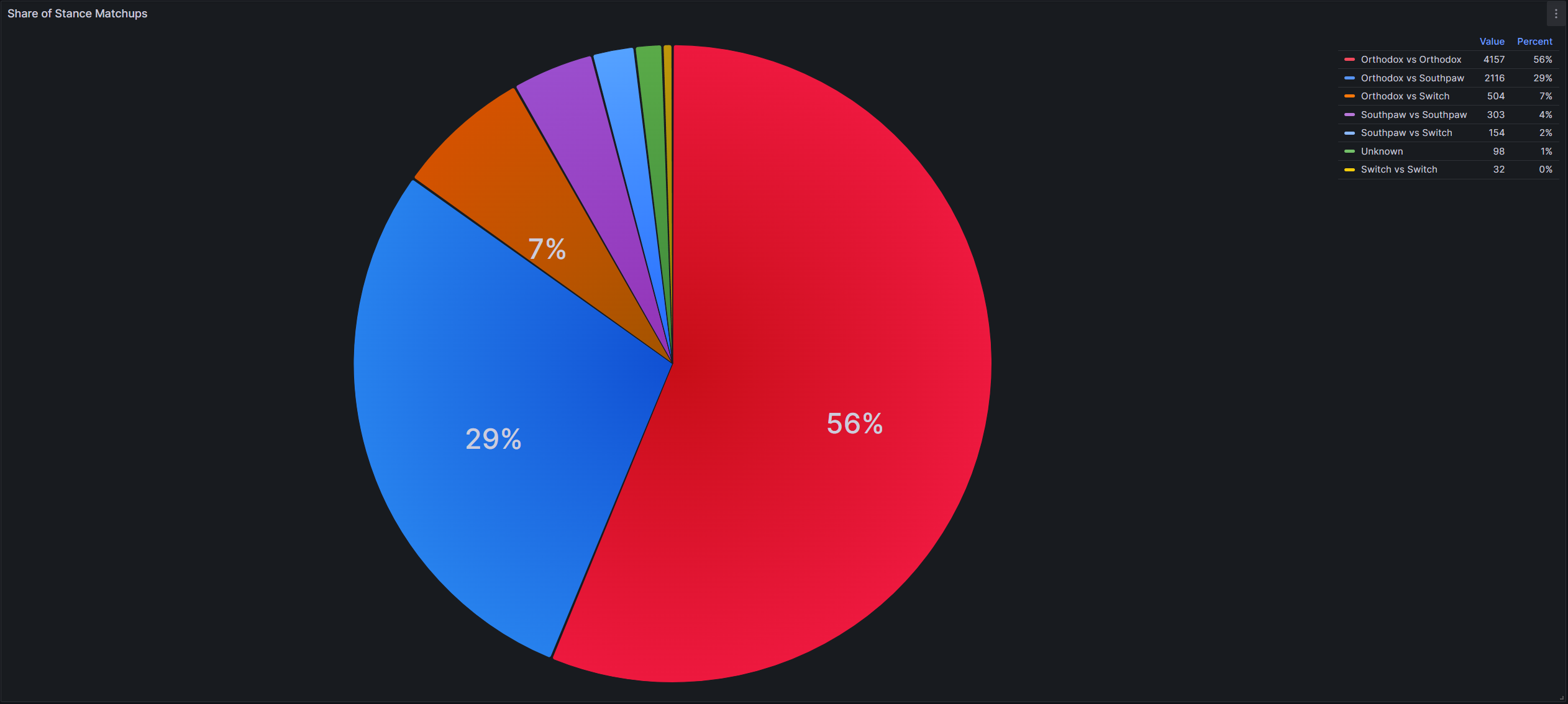

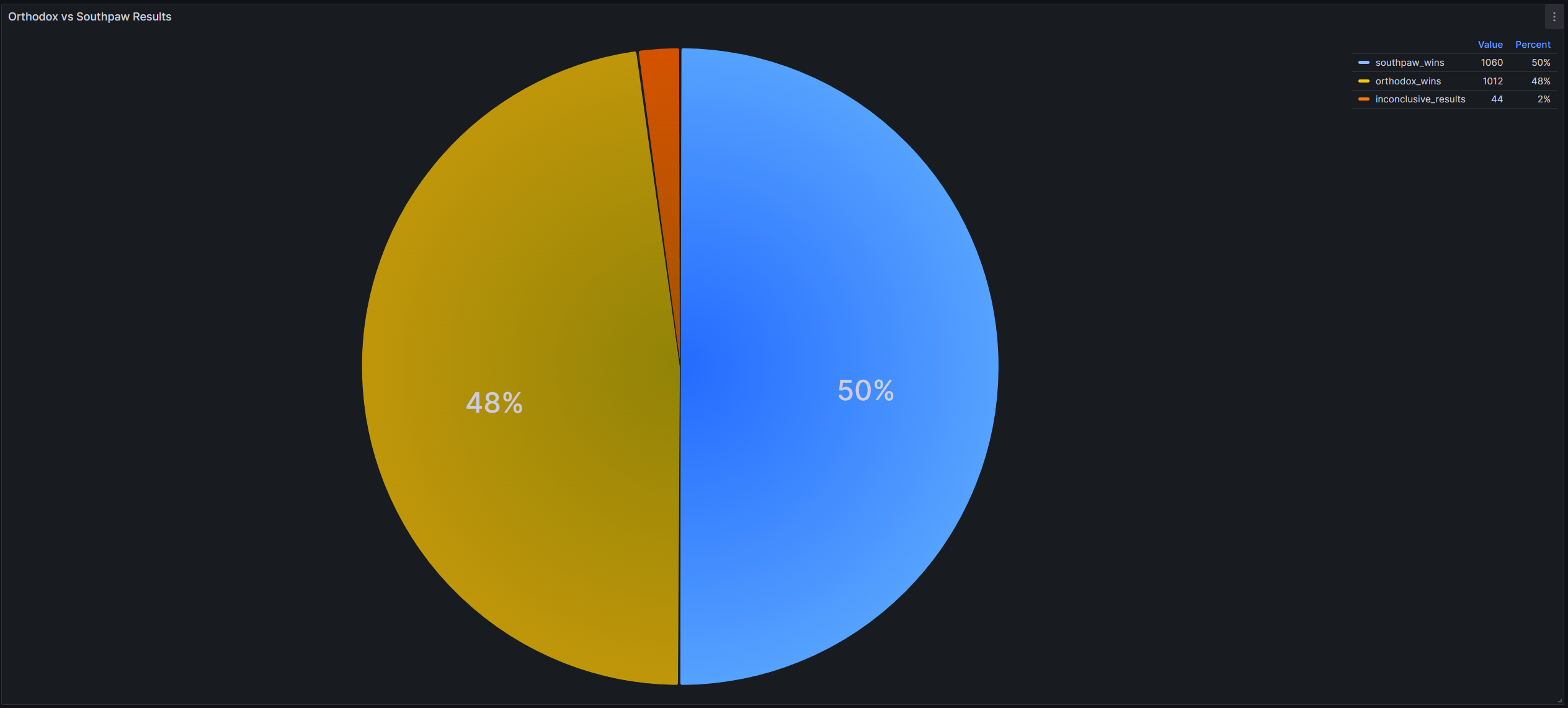

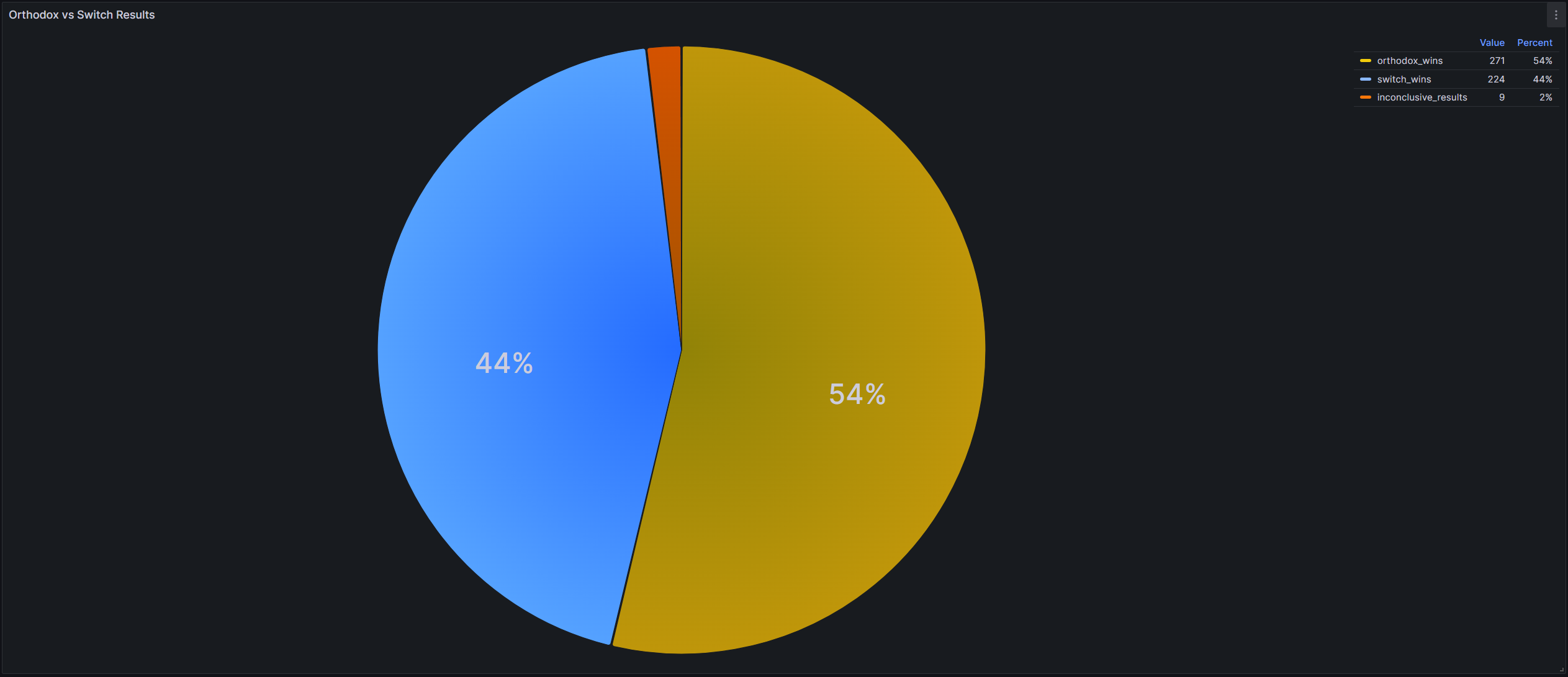

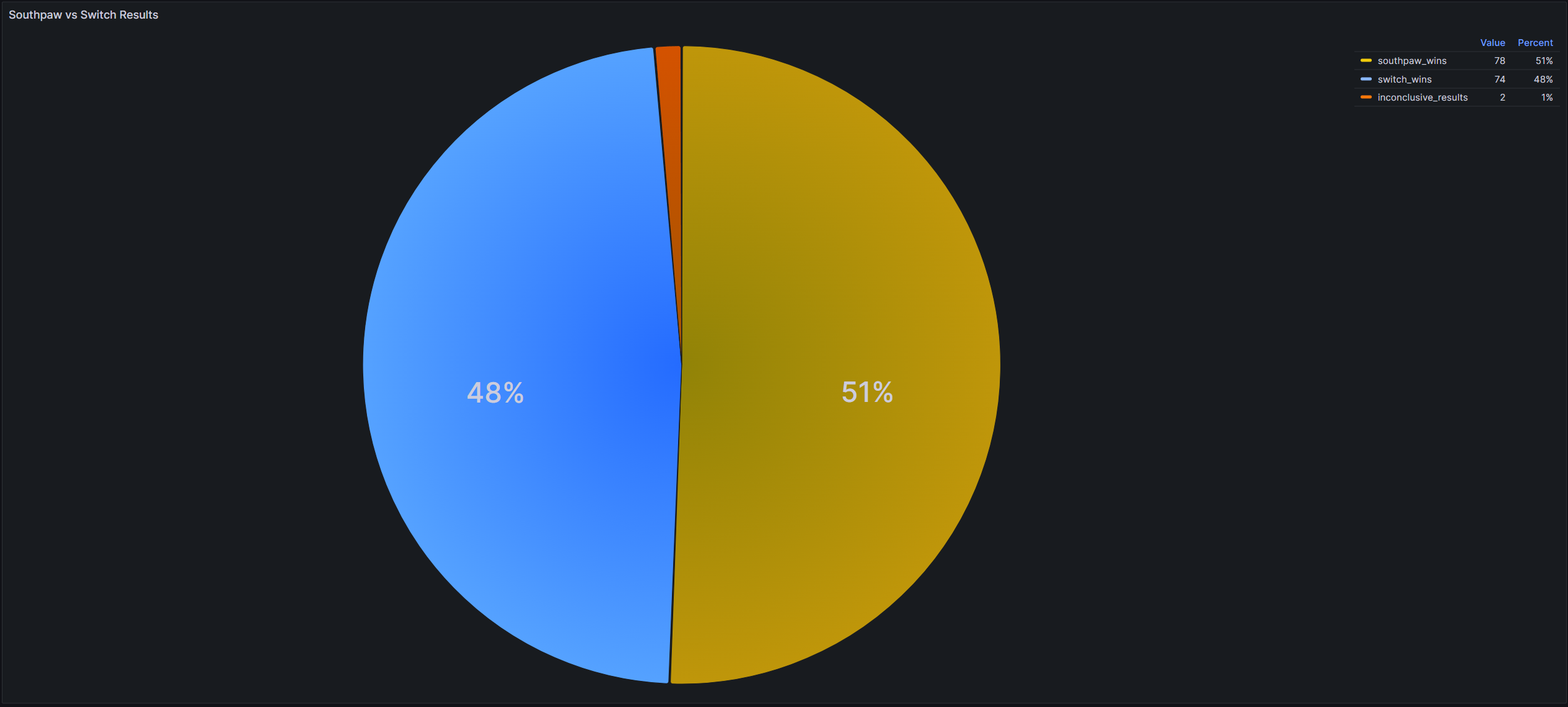

This is an analysis of what stances fighters use and how effective they are against each other.

SELECT stance, COUNT(*)

FROM fighters

GROUP BY stance

HAVING stance IS NOT NULL

SELECT (CASE

WHEN fr1.stance = 'Orthodox' AND fr1.stance = fr2.stance THEN 'Orthodox vs Orthodox'

WHEN (fr1.stance = 'Orthodox' AND fr2.stance = 'Southpaw') OR (fr1.stance = 'Southpaw' AND fr2.stance = 'Orthodox') THEN 'Orthodox vs Southpaw'

WHEN fr1.stance = 'Southpaw' AND fr1.stance = fr2.stance THEN 'Southpaw vs Southpaw'

WHEN fr1.stance = 'Switch' AND fr1.stance = fr2.stance THEN 'Switch vs Switch'

WHEN (fr1.stance = 'Orthodox' AND fr2.stance = 'Switch') OR (fr1.stance = 'Switch' AND fr2.stance = 'Orthodox') THEN 'Orthodox vs Switch'

WHEN (fr1.stance = 'Southpaw' AND fr2.stance = 'Switch') OR (fr1.stance = 'Switch' AND fr2.stance = 'Southpaw') THEN 'Southpaw vs Switch'

WHEN fr1.stance IS NULL OR fr2.stance IS NULL THEN 'Unknown'

END) as stance_matchup, COUNT(*)

FROM fights f

INNER JOIN fighters fr1

ON f.red_id = fr1.id

INNER JOIN fighters fr2

ON f.blue_id = fr2.id

GROUP BY stance_matchup

SELECT (CASE

WHEN (fr1.stance = 'Orthodox' AND fr2.stance = 'Southpaw') OR (fr1.stance = 'Southpaw' AND fr2.stance = 'Orthodox') THEN 'Orthodox vs Southpaw'

END) as stance_matchup,

SUM(CASE

WHEN (fr1.id = f.winner_id) AND (fr1.stance = 'Orthodox') THEN 1

WHEN (fr2.id = f.winner_id) AND (fr2.stance = 'Orthodox') THEN 1

END) as orthodox_wins,

SUM(CASE

WHEN (fr1.id = f.winner_id) AND (fr1.stance = 'Southpaw') THEN 1

WHEN (fr2.id = f.winner_id) AND (fr2.stance = 'Southpaw') THEN 1

END) as southpaw_wins,

SUM(CASE

WHEN f.winner_id IS NULL THEN 1

END) as inconclusive_results

FROM fights f

INNER JOIN fighters fr1

ON f.red_id = fr1.id

INNER JOIN fighters fr2

ON f.blue_id = fr2.id

WHERE (CASE

WHEN (fr1.stance = 'Orthodox' AND fr2.stance = 'Southpaw') OR (fr1.stance = 'Southpaw' AND fr2.stance = 'Orthodox') THEN 'Orthodox vs Southpaw'

END) IS NOT NULL

GROUP BY stance_matchupis Poetry' );

SELECT (CASE

WHEN (fr1.stance = 'Orthodox' AND fr2.stance = 'Switch') OR (fr1.stance = 'Switch' AND fr2.stance = 'Orthodox') THEN 'Orthodox vs Switch'

END) as stance_matchup,

SUM(CASE

WHEN (fr1.id = f.winner_id) AND (fr1.stance = 'Orthodox') THEN 1

WHEN (fr2.id = f.winner_id) AND (fr2.stance = 'Orthodox') THEN 1

END) as orthodox_wins,

SUM(CASE

WHEN (fr1.id = f.winner_id) AND (fr1.stance = 'Switch') THEN 1

WHEN (fr2.id = f.winner_id) AND (fr2.stance = 'Switch') THEN 1

END) as switch_wins,

SUM(CASE

WHEN f.winner_id IS NULL THEN 1

END) as inconclusive_results

FROM fights f

INNER JOIN fighters fr1

ON f.red_id = fr1.id

INNER JOIN fighters fr2

ON f.blue_id = fr2.id

WHERE (CASE

WHEN (fr1.stance = 'Orthodox' AND fr2.stance = 'Switch') OR (fr1.stance = 'Switch' AND fr2.stance = 'Orthodox') THEN 'Orthodox vs Switch'

END) IS NOT NULL

GROUP BY stance_matchup

SELECT (CASE

WHEN (fr1.stance = 'Switch' AND fr2.stance = 'Southpaw') OR (fr1.stance = 'Southpaw' AND fr2.stance = 'Switch') THEN 'Southpaw vs Switch'

END) as stance_matchup,

SUM(CASE

WHEN (fr1.id = f.winner_id) AND (fr1.stance = 'Southpaw') THEN 1

WHEN (fr2.id = f.winner_id) AND (fr2.stance = 'Southpaw') THEN 1

END) as southpaw_wins,

SUM(CASE

WHEN (fr1.id = f.winner_id) AND (fr1.stance = 'Switch') THEN 1

WHEN (fr2.id = f.winner_id) AND (fr2.stance = 'Switch') THEN 1

END) as switch_wins,

SUM(CASE

WHEN f.winner_id IS NULL THEN 1

END) as inconclusive_results

FROM fights f

INNER JOIN fighters fr1

ON f.red_id = fr1.id

INNER JOIN fighters fr2

ON f.blue_id = fr2.id

WHERE (CASE

WHEN (fr1.stance = 'Switch' AND fr2.stance = 'Southpaw') OR (fr1.stance = 'Southpaw' AND fr2.stance = 'Switch') THEN 'Southpaw vs Switch'

END) IS NOT NULL

GROUP BY stance_matchup